The conversation you have with a patient during a consultation is personal and specific to that patient's conditions and situation. You share a huge amount of invaluable information and knowledge, tailored to the individual. For most patients, understanding and motivation will be good at the time. Despite the best intentions, however, both fade rapidly once they leave your surgery and are distracted by everything else going on in their lives.

Dental Audio Notes allows you to provide patients with an audio recording to listen to again and reinforce their learning. Some patients may prefer to read, rather than listen to the conversation. This is why DAN includes an automated transcription feature.

When it comes to transcription, there are two options: manual or automated. With DAN you can use either. Each has its strengths and weaknesses. Automated transcription is rapid, secure, easy to use and low cost, but it is not as accurate as manual transcription. Manual transcription is as accurate as possible, but it needs a person to listen and transcribe, which increases time and cost, and you will need to be mindful of privacy.

This blog post lays out my perspective on transcription and automatic speech recognition with a shallow dive into the technology.

Although the increase in the use of voice to interact with phones, computers or home assistants is phenomenal, the underlying technology of automatic speech recognition (ASR) needs a little more time to mature to provide accurate transcriptions suitable for the dental, medical and healthcare domain. You may notice some unexpected results using an automated transcription service, including that provided by DAN.

If you wish to have high accuracy, use a manual transcription service that will guarantee 99% accuracy. You can always create a download link in DAN to share the audio with a transcription provider if a patient agrees. You may need to include this relationship in the data and privacy policy you have with your patients. Transcription service providers in the UK will charge in the region of £0.55 per minute of audio, offer a 24-hour turnaround and guarantee 99% accuracy. That is a low cost, plus a little admin for you or your team, for something that can really help some patients and support their decision to pursue a treatment. It is fairly common practice for medical consultants to record their notes and use a manual transcription service to provide transcripts for the next day.

When it comes to automatic speech recognition, accuracy above 85% is considered good and above 90% excellent. Accuracy is likely to be lower in the medical and healthcare domain, as clinical language uses specialist words with significantly more Latin influence. Further, these words have very specific clinical meanings and are likely the most important words of your discussion with the patient. This AWS Machine Learning Blog post [1] demonstrates, using Amazon Transcribe, how the transcription accuracy of MIT biology lectures started from a baseline of 88% and, with work, could be improved to just over 90%. Published recordings of MIT lectures are of relatively high audio quality, with little background noise, and are delivered by a professional orator using clear, steady speech. This is not particularly reflective of a two-way conversation in a surgery environment.

Accuracy is often measured by the word error rate (WER): the number of words that need to be corrected, divided by the number of words that the speaker originally said. An accuracy percentage is then given by (1 - WER) * 100. Assuming your consultation is 5 minutes long and you speak at an average of 120 words per minute, that is 600 words, so a 90% accurate transcription will have 60 words that require correcting. I would want to read and check over that before providing it to a patient.
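To make the arithmetic concrete, here is a minimal Python sketch of the WER calculation. It counts corrections as word-level substitutions, insertions and deletions (an edit distance), which is the usual way WER is computed. The function names and the example sentences are my own illustrations, not part of DAN.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of words actually spoken."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edits needed to turn the reference words seen so far
    # into the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1
            insertion = curr[j - 1] + 1
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def accuracy_percent(wer: float) -> float:
    """Accuracy as described in the text: (1 - WER) * 100."""
    return (1 - wer) * 100


def words_to_correct(minutes: float, words_per_minute: float, accuracy_pct: float) -> int:
    """Expected number of words needing correction in a consultation."""
    total_words = minutes * words_per_minute
    return round(total_words * (100 - accuracy_pct) / 100)


# Hypothetical example: one clinical term misheard as three everyday words.
spoken = "the patient has periodontitis"
transcribed = "the patient has period on titus"
wer = word_error_rate(spoken, transcribed)
print(wer, accuracy_percent(wer))     # 0.75 25.0
print(words_to_correct(5, 120, 90))   # 60
```

Note how a single misheard clinical term costs three corrections in a four-word sentence, which is why specialist vocabulary has such a large effect on the headline figure.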

We are early to the market and we are looking forward to the technology improving to the point where it becomes a very useful tool. As you have the original audio, future developments can be applied to historical records. In the meantime, we would recommend sending a download link to your preferred manual transcription service.

Your consultation with your patient contains special category personal information, and significant regulations, including GDPR, govern its handling and use. A significant benefit of automated speech recognition for the medical sector is that no third party needs to be involved in the transcription, which keeps the discussion confidential and removes privacy concerns. If selecting a manual transcription service, you must ensure that they have a privacy policy suitable for handling highly sensitive special category personal information.

Ensuring the privacy and security of the data DAN manages on your behalf is of the utmost importance to us here at Dental Audio Notes. All automated transcriptions are encrypted within DAN and can only be viewed by your authenticated users. Data never leaves the country of origin, in our case, the UK.

Digital assistants such as Alexa and Siri have brought automated speech recognition to the public at scale. Their main focus is to recognise specific commands and act upon them: the speech must be converted into text so that the command can be identified and fed into the action stage. This allows their automatic speech recognition (ASR) engines to focus on isolated action words rather than natural, conversational language. Further, when people interact with digital assistants, their speech tends not to be conversational but slow and clear, to help the ASR understand as well as possible.

Dictation is the conversion of speech into text in real time. The ASR engines behind dictation require a complete dictionary and language model, as they are trying to accurately convert speech to text to present back to the user. Results can be very impressive. As with a digital assistant, however, good results require the speaker to be slow and clear, not conversational. If you are interested in dictating your notes because it would be faster than typing, try using DAN to form your complete record, allowing your written notes to focus on being clinically useful.

Transcription is the conversion of long-form audio into text. It may be real-time, or from a batch source such as an audio file run at any time after a recording. Transcription and dictation will have very similar ASR engines. The significant difference is the nature of the audio supplied. Transcription is more likely to receive conversational audio, with multiple speakers, different accents, speed of talking, crossover between speakers and background noise. These all make it much more difficult for an ASR engine to produce accurate results.

The use of automated transcription is growing in business settings, transcribing meeting notes for example, especially with the recent increase in remote working. To make it work, meeting delegates are asked to speak clearly to have a more accurate transcription of their points and avoid the extra time needed to edit or write up. This changes the mood of the conversation, in a low impact setting. This is not something to pursue in your consultation with a patient - you need to be talking in the most effective way to align with the needs of your patient.

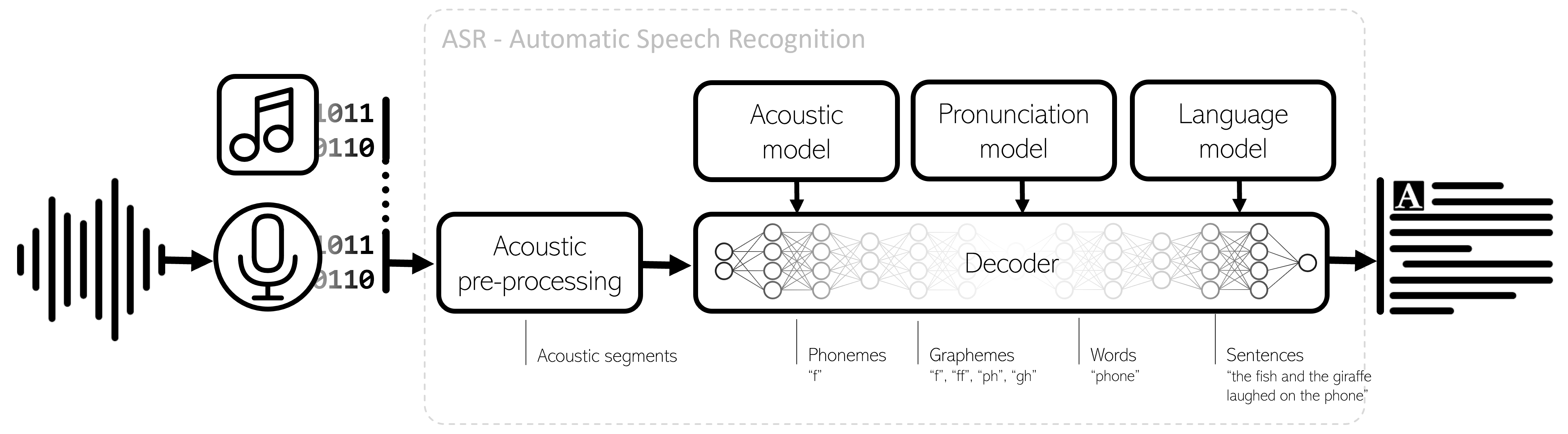

Speech recognition is almost subconscious for a person. Our brains and their biological signal processing abilities are incredible. It is very challenging for a computer to achieve the same results. The graphic above outlines the standard modules of an automatic speech recognition system.

Acoustic pre-processing identifies parts of the input containing speech and then transforms those parts into acoustic segments and sequences for analysis. The acoustic model breaks each segment into phonemes, the smallest unit of sound within speech. In the dictionary, phonemes are represented using the International Phonetic Alphabet; for example, “periodontitis” is represented by “/ˌpɛrɪədɒnˈtʌɪtɪs/”. The pronunciation model takes sequences of phonemes and identifies the words most likely to be associated with each sequence. The language model then forms sentences by predicting the most likely sequence of these words. Turning speech into words is not as simple as mapping discrete sounds; there has to be an understanding of the context as well. This is where custom language models help, increasing the likelihood that a domain-specific word will be selected.
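As a rough illustration of how sound and context combine, the sketch below multiplies an acoustic score (how well a candidate matches the sounds) by a language score (how plausible the words are) and picks the highest. All of the numbers, the candidate phrases and the `domain_boost` mechanism are assumptions made up for this example; real engines, and the way they implement custom language models, are far more sophisticated.

```python
from typing import Dict, Optional

# Illustrative numbers only: how well each candidate matches the sounds heard.
ACOUSTIC_SCORES: Dict[str, float] = {
    "periodontitis": 0.40,
    "period on titus": 0.45,
    "perry don't itis": 0.15,
}

# Illustrative numbers only: how plausible each phrase is in everyday English.
GENERAL_LANGUAGE_SCORES: Dict[str, float] = {
    "periodontitis": 0.001,
    "period on titus": 0.004,
    "perry don't itis": 0.0001,
}


def most_likely_text(
    acoustic: Dict[str, float],
    language: Dict[str, float],
    domain_boost: Optional[Dict[str, float]] = None,
) -> str:
    """Return the candidate with the highest combined acoustic x language score."""
    boost = domain_boost or {}

    def combined(candidate: str) -> float:
        return acoustic[candidate] * language.get(candidate, 0.0) * boost.get(candidate, 1.0)

    return max(acoustic, key=combined)


# General-purpose model: the everyday words win.
print(most_likely_text(ACOUSTIC_SCORES, GENERAL_LANGUAGE_SCORES))
# period on titus

# Hypothetical dental customisation: clinical terms are made more likely.
dental_boost = {"periodontitis": 50.0}
print(most_likely_text(ACOUSTIC_SCORES, GENERAL_LANGUAGE_SCORES, dental_boost))
# periodontitis
```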

The decoder is the engine of the system where machine learning (ML), neural networks and artificial intelligence (AI) concepts are applied. In short, it is the set of algorithms that analyses the data by using the models to create hypotheses, presenting the outputs with the highest probability of being correct from one step to the next until the final output. The decoder determines the speed and efficiency of the process, aiming to only search through outputs that have a good enough chance of matching the input.
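One common way to "only search through outputs that have a good enough chance" is a beam search, which keeps just the best few hypotheses at each step. I am not claiming this is what any particular ASR provider uses; the sketch below is a toy version with invented candidate words and probabilities.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Hypothesis = Tuple[Tuple[str, ...], float]  # (words so far, log-probability)

# Illustrative word-pair probabilities; "<s>" marks the start of a sentence.
BIGRAM_PROBS = {("<s>", "gum"): 0.05, ("gum", "disease"): 0.30}


def bigram_logprob(previous: str, word: str) -> float:
    """Toy language model: unseen word pairs get a small default probability."""
    return math.log(BIGRAM_PROBS.get((previous, word), 0.01))


def beam_search(
    steps: Sequence[Dict[str, float]],
    language_logprob: Callable[[str, str], float],
    beam_width: int = 2,
) -> List[Hypothesis]:
    """Keep only the `beam_width` most probable partial sentences at each step."""
    beams: List[Hypothesis] = [((), 0.0)]
    for candidates in steps:
        expanded: List[Hypothesis] = []
        for words, score in beams:
            previous = words[-1] if words else "<s>"
            for word, acoustic_prob in candidates.items():
                new_score = score + math.log(acoustic_prob) + language_logprob(previous, word)
                expanded.append((words + (word,), new_score))
        expanded.sort(key=lambda item: item[1], reverse=True)
        beams = expanded[:beam_width]  # prune unlikely hypotheses
    return beams


# Two stretches of audio, each with similar-sounding candidate words.
audio_steps = [{"gum": 0.6, "gun": 0.4}, {"disease": 0.5, "decease": 0.5}]
best_words, best_score = beam_search(audio_steps, bigram_logprob)[0]
print(" ".join(best_words))  # gum disease
```

A wider beam explores more alternatives and is less likely to discard the right answer early, at the cost of more computation.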

Artificial intelligence is the use of computers and algorithms to mimic the problem-solving and decision-making capabilities of the human mind. With respect to a transcription AI, it is trying to mimic the mind’s ability to separate and segment sound, recognise speech sounds and then understand what these sounds mean. With the mind as an archetype, neuroscience naturally influences the evolution of this field. The manifestation of AI is a collection of nodes responding to the outputs of other nodes in a system - a neural network.

The aim of a neural network is to infer suitable outputs given a set of inputs, reaching a conclusion based on evidence and reasoning. Further, this paper in Nature [2] shows how neuroscience research drives improvements in ASR by segmenting the incoming audio not by set time intervals, but by the phase of oscillations, better aligning with how the mind segments sound.

The visual below is a great representation of how neural networks work. It takes an image of a number “7” drawn on a canvas of 28x28 pixels (784 total pixels). Each pixel forms an input node, a value between 0 and 1 based on shade. The nodes above a determined “activation” value are passed to the next layer where the next analysis step takes place. This process continues through each step until it reaches the output nodes where the most likely value is identified. The algorithm is tuned by weighting the connections between nodes, influencing the output value to better match expected values.

This model can only output a digit (0-9), or the null value if no output layer values are above the activation value. If the input were a letter, the model would still try to output the digit it thinks it received, but with a low confidence score. A different set of models and algorithms is needed for each use case.
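For the curious, the digit example can be sketched in a few lines of Python. This is a simplified illustration under my own assumptions: two hidden layers of 16 nodes, a smooth sigmoid activation in place of a hard cut-off, and random (untrained) weights, so the digit it reports is meaningless. The point is only to show the flow from 784 pixel inputs to 10 outputs, and the null result when nothing is confident enough.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, biases)


def sigmoid(x: float) -> float:
    """Squash any value into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


def random_layer(n_inputs: int, n_outputs: int, rng: random.Random) -> Layer:
    """Untrained layer: one small random weight per connection, zero biases."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)] for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def forward(pixels: Sequence[float], layers: Sequence[Layer]) -> List[float]:
    """Pass the input through each layer in turn and return the output nodes."""
    activations = list(pixels)
    for weights, biases in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


def pick_digit(outputs: Sequence[float], threshold: float = 0.5) -> Optional[int]:
    """Return the most active output node, or None if none exceeds the threshold."""
    best = max(range(len(outputs)), key=lambda d: outputs[d])
    return best if outputs[best] > threshold else None


rng = random.Random(0)
layers = [random_layer(784, 16, rng), random_layer(16, 16, rng), random_layer(16, 10, rng)]
pixels = [rng.random() for _ in range(784)]  # stand-in for a 28x28 greyscale image
outputs = forward(pixels, layers)
print(len(outputs), pick_digit(outputs))
```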

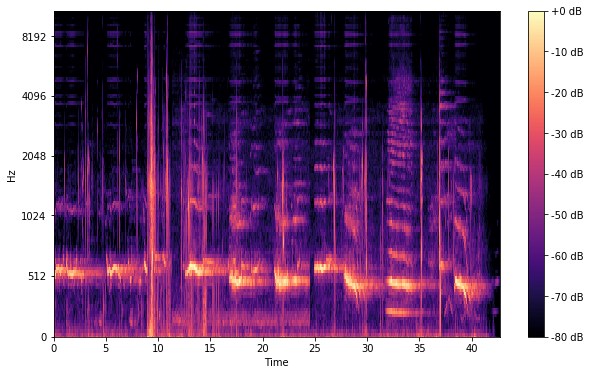

With respect to transcription, the number of outputs of the pronunciation model is the total count of known words within a language, and the output of the language model is theoretically every possible combination of multiple words. The input into the model is also far more complex than the simple example above. A Mel spectrogram of speech gives a visual representation of the digital data produced by acoustic pre-processing: the x-axis is time, the y-axis is sound frequency, and colour (the z-axis) represents intensity in decibels (dB).
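The two scales used in a Mel spectrogram can be shown with a little arithmetic. The sketch below uses one widely used formula for converting frequency in hertz to the Mel scale (other variants exist, and I am not stating which one any given ASR service uses), plus the standard conversion of signal power to decibels. The function names are illustrative.

```python
import math
from typing import List


def hz_to_mel(frequency_hz: float) -> float:
    """Convert hertz to mels using a common formula (one of several variants)."""
    return 2595.0 * math.log10(1.0 + frequency_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def power_to_db(power: float, reference: float = 1.0, floor: float = 1e-10) -> float:
    """Convert signal power to decibels relative to a reference power."""
    return 10.0 * math.log10(max(power, floor) / reference)


def mel_band_edges(f_min_hz: float, f_max_hz: float, n_bands: int) -> List[float]:
    """Band edges in hertz, evenly spaced on the Mel scale."""
    mel_min, mel_max = hz_to_mel(f_min_hz), hz_to_mel(f_max_hz)
    step = (mel_max - mel_min) / (n_bands + 1)
    return [mel_to_hz(mel_min + i * step) for i in range(n_bands + 2)]


edges = mel_band_edges(0.0, 8000.0, 10)
print([round(edge) for edge in edges])
```

The bands are narrow at low frequencies and wide at high frequencies, reflecting how human hearing is more sensitive to pitch differences in lower tones.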

If you would like to learn more about neural networks, this YouTube video by 3Blue1Brown [4] is an excellent resource.

The number of connections within a neural network grows rapidly as nodes and layers are added. I previously mentioned that the connections between nodes in a neural network are weighted. It is not feasible to manually determine and set these values for a network of any size. Further, the number of layers can be very large too, and what each layer actually represents can be difficult to specify, even if the end results are accurate. Machine learning is the process of “teaching” a model to set its own weightings and identify which layers and relationships are effective at narrowing in on a correct output. By providing inputs where the correct output is known, machine learning algorithms programmatically find the optimum set of weights to apply to each connection to better deliver correct results for the supplied data sets. Data used for this purpose is called “training data”. For transcription, this would be audio that has already been transcribed, most likely by a person.
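To show what "finding the weights" means in practice, here is a minimal sketch that trains a single node by gradient descent. The training data is a toy stand-in (two inputs, with the known correct output being 1 if either input is 1), not transcribed audio, and the learning rate and number of passes are arbitrary choices of mine.

```python
import math
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], float]  # (inputs, known correct output)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights: Sequence[float], bias: float, inputs: Sequence[float]) -> float:
    """Output of a single node for the given inputs."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_node(
    examples: Sequence[Example], epochs: int = 2000, learning_rate: float = 0.5
) -> Tuple[List[float], float]:
    """Nudge the weights after every example to reduce the prediction error."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            error = predict(weights, bias, inputs) - target
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Toy training data where the correct output is already known.
training_data: List[Example] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train_node(training_data)
for inputs, target in training_data:
    print(inputs, target, round(predict(weights, bias, inputs)))
```

Nobody chose the final weights by hand; they emerged from repeatedly comparing predictions with known answers, which is the same principle applied at vastly greater scale to transcribed audio.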

Training data needs to be representative of the use case for the model. A model trained using English will have different weights to one trained with French. Similarly, the language used in a dental consultation differs greatly from conversational English, with increased use of medical and Latin terminology. Further, these words are likely some of the most important in your consultation and need to be accurately identified for transcription to be of any value.

ASR technology has come a long way since the 1950s, when Bell Laboratories managed to isolate numbers from speech. Machine learning and AI are progressing very rapidly, and the increasing adoption of transcription services across many domains will further fuel these developments. I believe that in the not too distant future, automatic speech recognition will make automated transcription accurate enough for our field. At DAN, we are technology agnostic, allowing us to adopt alternative technology providers if they prove to be more effective and beneficial for the task at hand. ASR is the gateway stage of many AI pipelines. Once a computer can analyse text, there is a host of potential applications to unlock new value for patients and dental health care practitioners. We continue to take a keen interest in the development of this field.